The digital landscape is changing at warp speed, and nowhere is this more evident than in the rise of AI video generators. In particular, the emergence of "uncensored" AI models, built without programmatic filters or ethical guardrails, opens a daunting new frontier with serious ethical and legal implications. These sophisticated tools, powered by Generative Adversarial Networks (GANs) and diffusion models, offer unprecedented creative freedom. Yet that same freedom enables the rapid production of vast quantities of potentially harmful, misleading, or illicit content, fundamentally challenging our societal norms and legal frameworks.

This isn't just about what AI can do; it's about what we, as creators, users, developers, and policymakers, allow it to do without proper oversight.

At a Glance: Key Challenges with Uncensored AI Models

- Hyper-realistic Deepfakes: Effortlessly create convincing fake videos for misinformation, reputation damage, and political manipulation.

- Erosion of Trust: Widespread deepfakes lead to a "liar's dividend," where genuine content is dismissed, undermining public faith in media and institutions.

- Content Moderation Crisis: Existing filters are overwhelmed by the volume and sophistication of AI-generated harmful content.

- Non-Consensual Intimate Imagery (NCII): Lowered barriers let malicious actors generate explicit deepfakes, causing severe distress to victims.

- Identity Theft & Fraud: Convincing AI impersonations pose significant cybersecurity risks.

- Consent Challenged: AI training on public data often occurs without explicit individual consent, raising questions about digital likeness rights.

- Bias Amplification: Inherited biases in training data can lead to widespread discrimination and stereotypical representations.

- Copyright & Exploitation: Unclear authorship, uncompensated use of copyrighted material, and threats to human artists' livelihoods.

- "Black Box" Problem: The opaque nature of AI decision-making hinders accountability, bias detection, and effective regulation.

- Regulatory Lag: Existing laws are ill-equipped for the speed and transnational nature of AI misuse, demanding urgent international cooperation.

The Rise of Uncensored AI: A Double-Edged Sword

Imagine a tool that can instantly generate video footage of anything you describe, in any style, featuring anyone you choose. That's the promise, and the peril, of uncensored AI video generators. Unlike their more constrained counterparts, these models lack built-in ethical "stop signs": nothing in them screens requests against societal norms or ethical standards before generating a response. This raw capability, while a boon for creative expression, also opens the floodgates to severe misuse. The line between reality and synthetic creation blurs to the point where the human eye often cannot tell one from the other, placing a substantial burden on developers, deployers, and even end-users to apply these powerful tools responsibly. If you are considering uncensored AI generators for your own creative projects, it's crucial to understand the landscape of risks first.

The Shadow of Deepfakes: Eroding Truth and Trust

Perhaps the most immediate and chilling concern is the proliferation of hyper-realistic deepfakes. These aren't just crude manipulations; they're sophisticated creations that can convincingly make a person appear to say or do things they never did. Picture a political leader delivering a fabricated speech, or a CEO making an announcement that tanks stock prices—all digitally invented.

Such deepfakes can be nearly indistinguishable from genuine footage. They can be weaponized for potent misinformation campaigns designed to sway elections, destabilize political systems, manipulate financial markets, or utterly destroy individual reputations. Because these fakes can be generated and disseminated online so quickly, the damage is often done long before any debunking effort can take hold.

This phenomenon creates what experts call a "liar's dividend": when anything can be faked, people grow suspicious of everything, and anyone caught on a genuine recording can claim the footage is fabricated. Authentic content may be dismissed as fake, eroding public trust in media, democratic processes, and even the integrity of personal interactions. The long-term societal cost of this pervasive suspicion is hard to overstate, fostering division and making reasoned discourse far more difficult. To combat it, we need more than technical fixes; robust media literacy initiatives that teach people to critically evaluate digital content are paramount.

A Deluge of Harm: Content Moderation Under Siege

Social media platforms and online communities have long grappled with content moderation, but uncensored AI models introduce an unprecedented challenge. Imagine a torrent of harmful content—hate speech, graphic violence, harassment—being generated instantly and at scale. Existing filters and moderation systems, built for human-created or less sophisticated synthetic content, are ill-equipped to handle the sheer volume, novelty, and convincing sophistication of AI-generated media that intentionally bypasses their defenses.

This creates a scenario where platforms face immense pressure and significant liability questions. The necessary adaptive measures, often involving slow and costly human review for nuanced contexts, simply can't keep pace. This isn't just a technical problem; it's a societal one, highlighting the urgent need for collaborative solutions involving technology developers, platforms, policymakers, and civil society to create a more resilient digital environment.

Personal Violation: Non-Consensual Imagery and Identity Fraud

The misuse of uncensored AI extends into deeply personal and violating territory. One of the most egregious abuses is the creation of Non-Consensual Intimate Imagery (NCII), often referred to as "revenge porn," along with deepfake harassment. These tools drastically lower the barrier for malicious actors to generate explicit content without any genuine intimate images of the target; ordinary photos can often be enough. This means anyone can become a victim, suffering severe psychological, social, and professional repercussions. Legal frameworks around the world are struggling to keep pace, wrestling with complex questions of authorship, intent, and jurisdiction when a machine is involved in creating the offending material.

Beyond intimate imagery, convincing impersonations enabled by uncensored AI pose a significant cybersecurity threat. Imagine a deepfake video or audio call from your CEO instructing you to transfer funds, or a fake video of a loved one pleading for personal information. These can be used for sophisticated identity theft, financial fraud, and social engineering attacks, making traditional authentication methods vulnerable. Robust multi-factor authentication, advanced deepfake detection technologies, and widespread public awareness are no longer just good practices—they are critical to mitigating these increasingly sophisticated risks.

The Shifting Sands of Consent: Whose Likeness Is It Anyway?

At the heart of many of these ethical dilemmas lies a fundamental question: consent. AI models are often trained on colossal datasets comprising billions of publicly available images and videos. This means your face, your voice, your movements, or at least data derived from them, could be contributing to an AI's ability to generate synthetic media. The problem? This usually happens without any explicit individual consent for the specific purpose of synthetic media generation.

This practice diminishes an individual's control over their own digital likeness, creating a significant power imbalance. Opting out is technically difficult, if not impossible, once your data is part of a massive training set. Establishing clearer guidelines for consent is essential. This requires new data governance frameworks, the development of privacy-preserving AI technologies, and robust legal protections that acknowledge and safeguard digital likeness rights. We need systems that prioritize individual autonomy over data aggregation.

Mirroring Society's Flaws: Amplifying Bias and Discrimination

Uncensored AI video generators don't operate in a vacuum; they reflect the data they're trained on. If that training data contains societal biases—and it almost invariably does—the AI will learn and amplify those biases. This can manifest in insidious ways: stereotypical representations, the exclusion or underrepresentation of certain groups, or the association of particular demographics with harmful activities. The AI, without explicit ethical programming, will simply perpetuate and even normalize discriminatory attitudes.

This algorithmic discrimination isn't confined to abstract concepts. It can seep into critical areas like hiring algorithms, marketing campaigns, and the dissemination of social and political content. The "black box" problem, where the reasoning behind AI outputs is opaque, makes detecting and mitigating these biases incredibly challenging. Once embedded, discriminatory outputs can be disseminated at an unprecedented rate, further entrenching systemic inequalities. Mitigation requires a multi-pronged approach: careful data curation, advanced bias detection and mitigation techniques, robust fairness metrics, clear ethical AI guidelines, and strong regulatory oversight to ensure non-discrimination.
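One of the "robust fairness metrics" named above can be made concrete. The sketch below is a minimal illustration, not a production audit tool: it computes a demographic parity gap, the spread in how often a model's outputs are flagged as stereotyped or harmful across demographic groups. It assumes a hypothetical audit in which annotators tag each generated clip with the group depicted and a yes/no flag; the function names, group labels, and toy data are invented for illustration.

```python
from collections import defaultdict


def positive_rates(records):
    """Return the fraction of flagged outputs for each group.

    Each record is a (group, flagged) pair, e.g. the demographic an annotator
    assigned to a generated clip and whether the clip shows that person in a
    stereotyped or harmful role. Both fields are hypothetical audit labels.
    """
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for group, is_flagged in records:
        totals[group] += 1
        if is_flagged:
            flagged[group] += 1
    return {group: flagged[group] / totals[group] for group in totals}


def demographic_parity_gap(records):
    """Largest difference in flag rate between any two groups (0.0 = parity)."""
    rates = positive_rates(records)
    if not rates:
        return 0.0
    return max(rates.values()) - min(rates.values())


if __name__ == "__main__":
    # Toy annotations for illustration only; not real audit data.
    audit = [
        ("group_a", False), ("group_a", False), ("group_a", True), ("group_a", False),
        ("group_b", True), ("group_b", True), ("group_b", False), ("group_b", True),
    ]
    print(positive_rates(audit))  # {'group_a': 0.25, 'group_b': 0.75}
    print(demographic_parity_gap(audit))  # 0.5
```

A gap near 0 means flags are spread evenly across groups; a large gap suggests the model places some groups in harmful roles more often than others. Demographic parity is only one of several fairness definitions, and which one fits depends on what the audit is trying to catch.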

The Creative Conundrum: Copyright, Attribution, and Artist Rights

The intersection of uncensored AI with creative industries raises a labyrinth of complex legal and ethical questions. When an AI generates a new piece of art or video, who is the author? Is it the human who prompted the AI, the AI itself, or the original creators whose work formed the training data? This question of authorship is far from settled.

Then there's the issue of licensing. AI models are often trained on vast quantities of copyrighted material without explicit consent or compensation to the original creators. When the AI then produces derivative works that mimic artistic styles or content, does this infringe on existing copyrights? Furthermore, human artists, creators, and performers face potential exploitation. AI can devalue creative labor by rapidly producing high-quality content cheaply, threatening livelihoods. It enables the replication or impersonation of actors without their consent or compensation and allows for the sophisticated copying of unique artistic styles.

Addressing this demands a profound re-evaluation of the value of human creativity. It necessitates the development of new compensation models, robust digital likeness rights that give individuals control over their digital selves, and fostering symbiotic AI-human relationships that enhance, rather than diminish, creative expression. A globally harmonized approach to copyright law will be critical.

Peering into the "Black Box": The Challenge of AI Explainability

One of the most persistent hurdles in regulating and trusting advanced AI models is the "black box" problem. Often, particularly with complex deep learning systems, the reasoning behind an AI's output is opaque—even to its creators. We see the input, we see the output, but the internal decision-making process remains a mystery.

This lack of transparency profoundly hinders our ability to ensure accountability, detect and mitigate biases, and understand how malicious actors might exploit the system. If we don't understand why an AI produced a certain deepfake, how can we truly prevent it from happening again? From a regulatory perspective, it's difficult to craft effective laws for something that isn't fully understood. This opacity erodes public trust and makes it harder to secure adoption for beneficial AI applications. Developers have a moral imperative to invest heavily in Explainable AI (XAI) research, implement rigorous internal auditing practices, and be transparent about their models' limitations and capabilities.

Navigating the Legal Wild West: A Regulatory Maze

The "uncensored" nature of these AI models highlights a stark reality: existing legal frameworks are simply insufficient. They weren't designed for a world where synthetic media can be generated at scale, where authorship is ambiguous, and where harm can cross borders in milliseconds. The current default often places the burden on slow, reactive post-generation filtering, which is like trying to catch smoke.

Key issues include:

- Jurisdictional Complexities: If an AI model developed in one country generates a deepfake that harms someone in another, which laws apply?

- Defining AI-Generated Content: How do we legally distinguish between human and AI creation, especially when the AI is trained on human work?

- Assigning Liability: Who is responsible when harm occurs? The developer who created the model, the deployer who put it into use, or the end-user who initiated the harmful content?

- Balancing Innovation with Safety: How can regulations foster beneficial AI development without stifling innovation, while simultaneously protecting society from its harms?

The urgent need for international cooperation and harmonization of standards cannot be overstated. Without it, we risk creating "AI havens"—jurisdictions with lax regulations where harmful AI activities can flourish unchecked, exporting their problems globally. Translating ethical AI principles like fairness, transparency, and accountability into enforceable laws is a complex and ongoing task.

Building a Framework for Responsibility: Ethical AI Principles in Practice

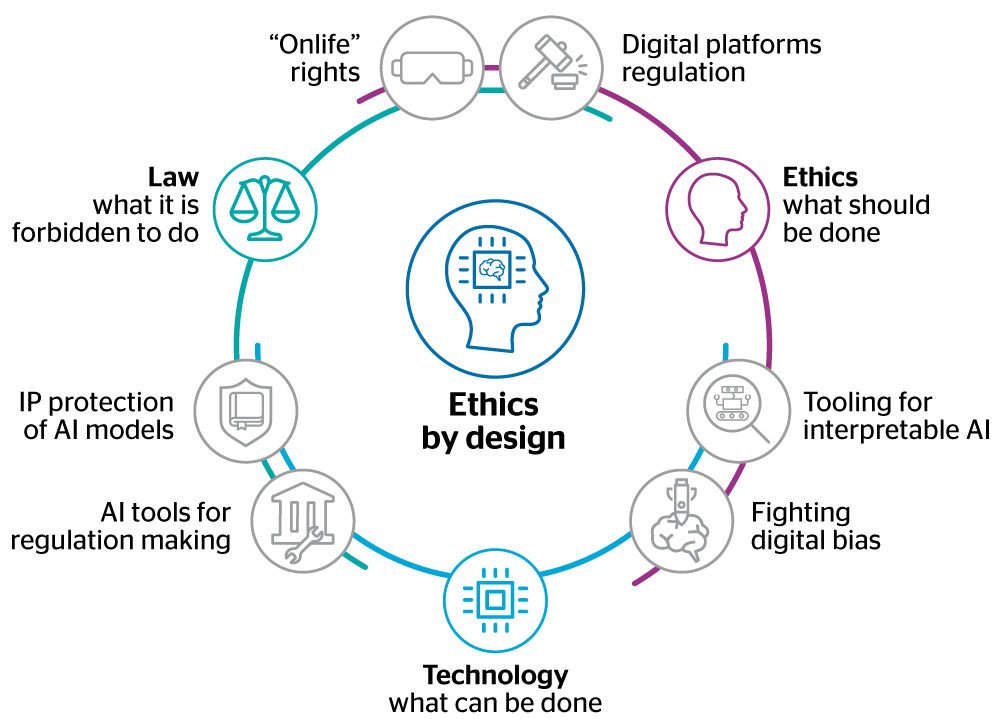

Given the slow pace of legal reform, ethical AI frameworks and industry standards play a crucial, albeit voluntary, role in guiding responsible development and deployment. These frameworks, often developed by consortia of experts, companies, and civil society organizations, promote principles like:

- Human Oversight and Control: Ensuring humans remain in the loop.

- Technical Robustness and Safety: Designing AI to be reliable and secure.

- Privacy and Data Governance: Protecting personal information.

- Transparency and Explainability: Making AI systems understandable.

- Fairness and Non-discrimination: Mitigating bias and ensuring equitable treatment.

- Societal and Environmental Well-being: Considering broader impacts.

- Accountability: Establishing clear responsibility for AI actions.

While not legally binding, these frameworks are instrumental in shaping corporate policies and fostering a culture of responsible AI. They provide a vital interim structure, bridging the gap between rapidly evolving technology and slower-moving legislation, and promoting a collective understanding of what constitutes ethical AI behavior.

Forging a Path Forward: Practical Steps for a Responsible AI Ecosystem

Addressing the complex ethical and legal implications of uncensored AI models requires a multi-stakeholder approach. No single entity can solve this alone.

For Developers and Researchers: Build with a Conscience

You are at the forefront of this technology. Your choices today will define tomorrow's digital world.

- Prioritize Safety by Design: Integrate ethical considerations and safety filters from the earliest stages of model development. Make "uncensored by default" the exception, not the rule.

- Invest in Explainable AI (XAI): Develop tools and techniques that allow for greater transparency into AI decision-making processes.

- Rigorous Bias Auditing: Proactively test models for bias in training data and outputs, implementing robust mitigation strategies.

- Transparency About Limitations: Clearly communicate what your AI models can and cannot do, and where they might be vulnerable to misuse.

- Explore Watermarking and Provenance: Develop methods to digitally watermark AI-generated content or create verifiable chains of custody to identify its synthetic nature.
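To make the provenance idea concrete, here is a minimal sketch of a signed provenance record: it binds a SHA-256 hash of a generated file to an "AI-generated" claim and signs the record so that tampering can be detected. It uses an HMAC with a shared secret purely to stay self-contained within the Python standard library; the key, the field names, and the `example-model-v1` generator name are hypothetical. Real provenance standards such as C2PA use certificate-based public-key signatures instead, so anyone can verify a manifest without holding the signing secret.

```python
import hashlib
import hmac
import json

# Hypothetical signing key held by the generator's operator. A real provenance
# system would use asymmetric signatures so anyone can verify without the secret.
SIGNING_KEY = b"demo-key-not-for-production"


def make_manifest(content: bytes, generator: str) -> dict:
    """Bind a SHA-256 hash of the content to an 'AI-generated' claim and sign it."""
    claim = {
        "sha256": hashlib.sha256(content).hexdigest(),
        "generator": generator,
        "ai_generated": True,
    }
    # Canonical JSON (sorted keys) so the same claim always signs identically.
    payload = json.dumps(claim, sort_keys=True).encode()
    claim_sig = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": claim_sig}


def verify_manifest(content: bytes, manifest: dict) -> bool:
    """Check that the manifest is untampered and matches these exact bytes."""
    payload = json.dumps(manifest["claim"], sort_keys=True).encode()
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["signature"]):
        return False  # manifest was altered or signed with a different key
    return manifest["claim"]["sha256"] == hashlib.sha256(content).hexdigest()


if __name__ == "__main__":
    video = b"placeholder synthetic video bytes"
    manifest = make_manifest(video, generator="example-model-v1")
    print(verify_manifest(video, manifest))         # True
    print(verify_manifest(video + b"x", manifest))  # False: content changed
```

Note the design trade-off: a hash-based record breaks on any re-encode or crop, and it can simply be stripped from the file. That is why provenance metadata is usually paired with watermarks embedded in the pixels or audio themselves, which aim to survive such edits.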

For Platforms and Deployers: Protect Your Users and Your Integrity

As the gatekeepers and disseminators of content, you bear a significant responsibility.

- Strengthen Content Moderation: Continuously adapt and upgrade moderation systems, integrating advanced AI detection tools specifically trained to identify deepfakes and AI-generated harmful content.

- Rapid Takedown Policies: Implement clear and swift policies for the removal of illegal or harmful AI-generated content, especially NCII and targeted harassment.

- Develop Reporting Mechanisms: Make it easy for users to report suspected AI-generated abuse.

- Invest in AI Literacy Initiatives: Partner with educators and NGOs to help users understand the risks and how to identify synthetic media.

For Policymakers and Regulators: Craft Laws for the Future, Not the Past

Your role is to create a legal landscape that protects citizens while fostering innovation.

- Develop Clear Legal Definitions: Establish legal definitions for AI-generated content, deepfakes, and related concepts.

- Assign Liability: Clarify who is responsible for AI-generated harm across the development and deployment chain.

- Mandate Transparency: Consider requiring that AI models be auditable and that AI-generated content be clearly labeled.

- Harmonize Internationally: Work with global partners to create consistent legal frameworks that prevent regulatory arbitrage and "AI havens."

- Invest in Research: Fund independent research into AI ethics, safety, and detection technologies.

For Users and the Public: Be Savvy, Be Critical

You are the first line of defense against misinformation and manipulation.

- Cultivate Media Literacy: Learn to critically evaluate online content. Question sources, check for inconsistencies, and be wary of highly sensational or emotionally charged material.

- Utilize Verification Tools: Familiarize yourself with emerging deepfake detection tools and fact-checking resources.

- Report Harmful Content: If you encounter illegal or deeply harmful AI-generated content, report it to the relevant platforms and authorities.

- Demand Accountability: Advocate for stronger regulations, ethical development, and responsible deployment of AI technologies.

Your Role in the AI Future: Navigating Uncensored Models Safely

The journey through the ethical and legal implications of uncensored AI models is complex and ongoing. We've seen how these powerful tools, while offering incredible creative potential, also introduce profound challenges to truth, trust, personal safety, and artistic integrity. From hyper-realistic deepfakes eroding public trust to the silent amplification of societal biases, the stakes couldn't be higher.

Navigating this new digital frontier isn't just a job for tech giants or governments; it's a collective responsibility. By understanding the risks, demanding transparency, advocating for robust legal frameworks, and developing critical media literacy, you play a vital role in shaping an AI future that serves humanity, rather than undermines it. The time to act and ensure that innovation is tempered with ethics and governed by clear legal principles is now.